Recently, I came across the Saga pattern when I stumbled upon this video from last year’s Goto conference. In the video, Caitie McCaffrey talks about how Saga pattern can (also) be applied to distributed systems and transactions spanning several endpoints/process boundaries.

For a new IoT project, we’ve been looking for solutions for transaction and failure management in cloud based distributed systems. That’s when we realized that saga pattern can be a good choice. The solution is being built using the Microsoft Azure stack (IoT hub, Service Fabric, DocumentDb, Web Apps, Azure Redis Cache, among other things). The solution utilizes reliable stateful services available as part of Azure Service Fabric along with the saga pattern to manage workflows and failures.

The problem statement Link to heading

The scenario is a typical IoT message/request processing scenario. A message comes into the system through one of the ingress points and then it has to be processed — think messages coming from IoT devices. On the other side, a request comes from the user and it has to be processed — think account management, device data queries, etc. During the processing, there could be several sub-tasks that need to be performed across several process boundaries and endpoints. Some of these could be database operations, external service calls, in-memory calculations, etc. What if some of these sub-tasks failed? Would the system still be in a consistent state? Should we leave it like that?

Saga Pattern Link to heading

The saga pattern is more of a failure management pattern, as rightly stated by Clemens Vasters. Basically, there are several sub-tasks and their corresponding compensation tasks which constitute a saga. If any of the steps/sub-tasks of the saga fails (or the saga is aborted), then the compensation tasks are executed (in an order) to take the system into an eventually consistent state. So, the main problem that this pattern solves is taking the system back into a consistent state in case of failures. Taking the well-known transaction example — Imagine you are trying to book a car, hotel and flight in the same transaction workflow and the flight reservation fails, would you like to keep the hotel and car? Absolutely not. The system should be able to rollback/compensate the steps for car and hotel reservation (cancel them). That’s what the saga pattern is all about — failure management. For more on Saga pattern, I recommend watching the video I mentioned earlier.

Azure Service Fabric Link to heading

Let’s look at Azure service fabric. A micro-services platform providing mainly two types of services — stateless and stateful. Micro-services pattern has been around for a long time but it has become famous recently because it solves a lot of problems for highly scale-able and available distributed systems. To understand service fabric basics, I recommend watching this Azure Fridays video.

The solution Link to heading

When we considered these stateless and stateful services provided by Azure Service Fabric, we found an excellent solution to maintaining a highly available and scale-able workflow and failure management system backed by the saga pattern. Let’s look at it in detail.

Let’s take an example workflow. We want to register a new user, in our IoT system, along with his devices. The typical steps would be,

1. Register the user with identity provider (external service call)

2. Insert the user in database (database op)

3. Register user’s devices (metadata) in the device management system (Azure IoT Hub)(service call)

4. Insert device metadata in the database (database op)

5. Authorize user and it’s devices in the system (service/database call)

Also, we would want to perform these steps in a logical order so that, in the case of a failure, the compensation of completed tasks is easy and less costly.

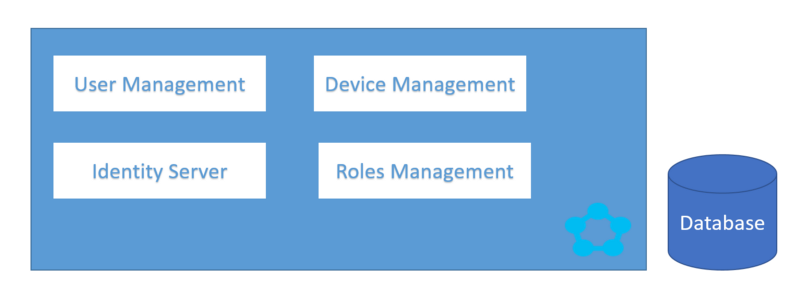

Let’s look at how we can implement this using saga pattern and Service Fabric reliable micro-services — We have micro-services dedicated for user, device, identity and role management. They are all stateful services.

To make sure that the workflow is completed, the most important thing is to maintain the state of the saga. This is where the reliable collections of stateful service can help us. More on this in one of the comments on this post.

This figure shows a simple service fabric cluster with different micro-services needed for the above workflow execution.

Happy Path Link to heading

The saga starts with the request coming to the user management service for user registration. It creates an entry in its database and passes the control and saga state to the identity management service by making an entry into its reliable collection. Once the identity management service completes its task it passes control and state to roles management service, and so on. This way the state and control passes through micros-services for execution of the workflow/saga.

uh-oh Link to heading

Now, lets consider a failure scenario — let’s assume that the service call for registration of devices in the device management system, failed. We wouldn’t want to add device metadata in the database, in this case, as the devices are not even registered. That’s when failure management comes into picture. Now, the compensation tasks for device registration will kick in and the system will be taken back into a consistent state.

Closing comments Link to heading

I believe, the combination of saga pattern and reliable collections is making easier is the management of multiple sagas running in parallel. All micro-services are independent of each other, efficient maintaining the saga state in their respective reliable collections and performing the steps at their own pace. Beautiful!

Following are some earlier comments and my responses on this post, migrated from Medium.

Rolf Szomor Link to heading

Thanks for the article, this is pretty useful for us (we exactly building sagas now with Azure SF). However there is an interesting topic that I simply cannot find information on (not even in other articles). So when a saga is running, lets say some calls are succeeded, lets say one call failed (even after retries), and lets say we are doing compensation. Now what happens if during compensation a concurrent update (a concurrent saga) is started on the very same domain entity? Is there any way to handle this other than actually not letting the “concurrent sagas to start before the first one finishes?

And if this is a proper solution at all, how can we accomplish that? We thought about putting “virtual locks” on our entities, so mark them in a so called “synchronization service”, and while one is “locked” (a long running transaction is in progress), we deny to start a new one on the same entity (delay or reject the request).

We think that is a very-very common scenario, and should be an essential question, we are wondering why there are not many articles about this aspect.

Many thanks in advance.

As far as I understand, Saga pattern only talks about failure management. You should approach failure management and concurrency management as two different problems. Concurrency management is a very common problem in distributed systems and locking is one of the industry preferred solutions. Here’s a nice article about distributed locking — https://martin.kleppmann.com/2016/02/08/how-to-do-distributed-locking.html

And once you have decided on an approach, then you can design a system where a saga activity can be equipped with concurrency management as well.

Bernd Rücker Link to heading

Thanks for sharing your thoughts! I actually missed the information how you exactly implemented the Saga in the end. Do you store some state in all services? How do you do the correlation at the end-of-the day and assure order?

I am a big fan of using lightweight workflow engines to do state persistence and Saga handling, like e.g. shown in this (Java based) example: https://github.com/flowing/flowing-trip-booking-saga/ (or some more thoughts here: https://blog.bernd-ruecker.com/saga-how-to-implement-complex-business-transactions-without-two-phase-commit-e00aa41a1b1b). Would that suite your environment as well?

Feel free to also contact me via mail if you like to discuss :-)

The correlation is done using a message id or a request id which flows across the services inside the activity parameters. The state is stored in the reliable collections (dictionaries in this case) which are inbuilt into the service fabric stateful micro-services framework.

I also read your linked blog post and as you’ve rightly mentioned that both (routing slip and process manager) can be correct implementations of the pattern.

In this case, we have the process manager implementation with some characteristics of routing slip — basically, one micro-service starts/initiates the saga but the state is transferred among the services using their respective reliable collections. In case of failures, the process manager service initiates the compensation. The process manager service is not always the same, it depends on where the saga is started — for instance it can be either user management service or message processing service or any other service.

Also, the system has gone through a lot of improvements since I wrote the post.

The ultimate goal is to bring the system back into a consistent state.